Object Dependency Checking in Snowflake

Snowflake allows dropping of an object that has a view dependent on it. For example, create tables A and B, and view C that joins A and B together. Snowflake will allow you to drop table A or B, and when you attempt to use view C you will get an error. This behavior is fairly common for DBMSes.

It’s important to document and maintain dependencies using a combination of object (source) control, a rigorous testing regimen, and promotion protocols. That said, here’s a stored procedure to check object dependencies, specifically to see if all views are working. The stored procedure inventories all views in the Snowflake account and attempts to select a single row. If the row selection succeeds, it reports success for that view. If it fails, it reports the reason for the failure.

Note that running this stored procedure could take a very long time since it needs to select one row from every view in the account. This is a prototype procedure, and a future version will include the ability to limit the database and/or schema to test, probably using RegEx to enable pattern matching. This will allow testing of all views in the PROD database matching something like a prefix, since whatever change may be only likely to affect views in that database starting with a prefix.

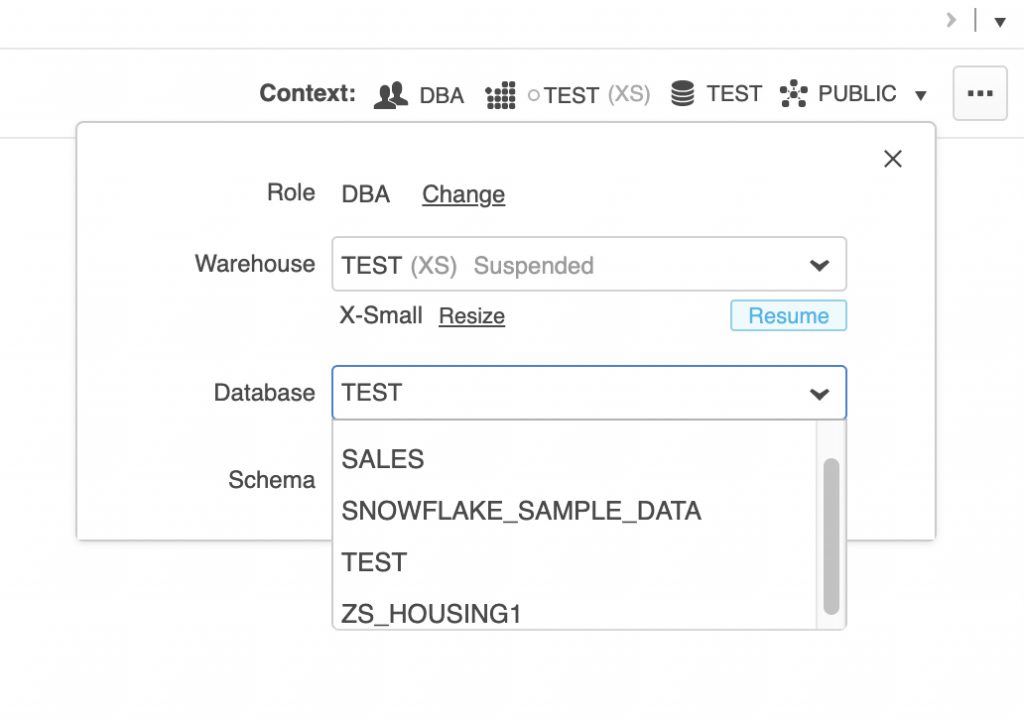

use warehouse TEST; -- An Extra Small warehouse is best for this test.

use role SYSADMIN; -- Could be any role except for ACCOUNTADMIN. The ACCOUNTADMIN will own the SP to test views and grant usage to this role.

use database TEST; -- Could be any database; just put one in context.

use role ACCOUNTADMIN; -- We need to create the stored procedure as ACCOUNTADMIN to ensure we have read access to all views.

/********************************************************************************************************************

Procedure: TestAllViews

Description: Executes as ACCOUNTADMIN and attempts to select a single row from every view in the account. For each

view in the account, it returns "Success" if it can read a single row, or "Failure" and the error

message if it fails trying to read a single row. This enables a quick sanity check of the views after

changing objects, such as dropping a table, view, or column.

Parameters: None.

Return: A string with each line the test result of each view.

Notes: This is a prototype SP. Future versions will have parameters to limit databases and schemas to check.

*********************************************************************************************************************/

create or replace procedure TEST.PUBLIC.TestAllViews()

returns string

language JavaScript

execute as OWNER

as

$$

var sql_command =

`select DATABASE_NAME from INFORMATION_SCHEMA.DATABASES;`;

var databaseArray = new Array();

try {

var stmt = snowflake.createStatement( {sqlText: sql_command} );

var resultSet = stmt.execute();

while (resultSet.next()) {

databaseArray.push(resultSet.getColumnValue('DATABASE_NAME'));

}

}

catch (err) {

return "Failed: " + err; // Return a success/error indicator.

}

var outString = '';

var index = 0;

var viewArray = new Array();

while (index < databaseArray.length) {

sql_command = "select TABLE_CATALOG, TABLE_SCHEMA, TABLE_NAME from " + databaseArray[index] +

".INFORMATION_SCHEMA.VIEWS where TABLE_SCHEMA <> 'INFORMATION_SCHEMA';";

try {

var stmt = snowflake.createStatement( {sqlText: sql_command} );

var resultSet = stmt.execute();

while (resultSet.next()) {

viewArray.push(resultSet.getColumnValue('TABLE_CATALOG') + "." +

resultSet.getColumnValue('TABLE_SCHEMA') + "." +

resultSet.getColumnValue('TABLE_NAME'));

}

}

catch (err) {

return "Failed: " + err; // Return a success/error indicator.

}

index++;

}

index = 0;

// We now have a complete list of views... Test them one at a time...

var outArray = new Array();

index = 0;

while (index < viewArray.length) {

sql_command = "select * from " + viewArray[index] + " limit 1;";

try {

var stmt = snowflake.createStatement( {sqlText: sql_command} );

var resultSet = stmt.execute();

outArray.push("Success..." + viewArray[index]);

}

catch (err) {

outArray.push("Failure..." + viewArray[index] + " : " + err.message.replace(/(\r\n|\n|\r)/gm, " - "));

}

index++;

}

index = 0;

while (index < outArray.length) {

outString += outArray[index] + "\n";

index++;

}

return outString;

$$;

grant usage on procedure TEST.PUBLIC.TestAllViews() to role SYSADMIN;

use role SYSADMIN;

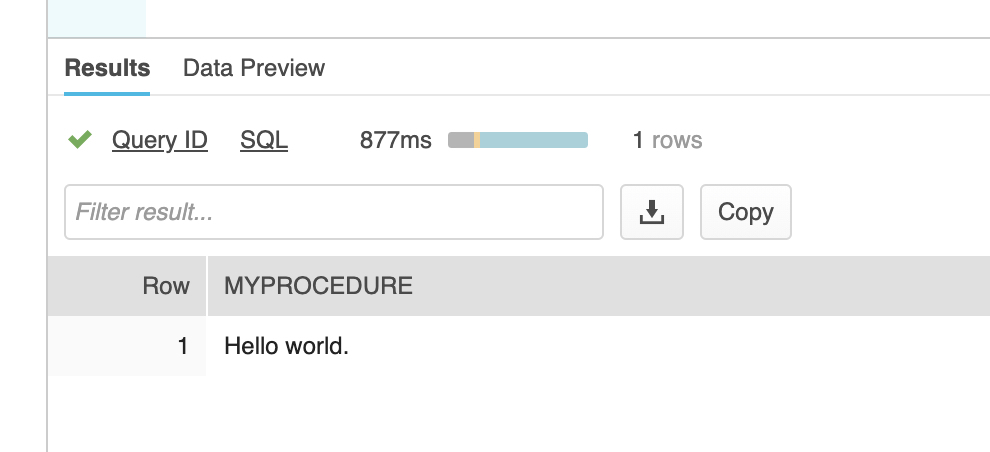

call TEST.PUBLIC.TestAllViews();

-- Note: Completing this SP could take a long time.

/*

The output should look something like this (note dropped table "TEST.VIEWTEST.A" to break view "TEST.VIEWTEST.C")

Success...CITIBIKE_BIG_V2.CLUSTERED.TRIPS_VW

Success...CITIBIKE_BIG_V2.CLUSTERED.TRIPS_WEATHER_VW

Success...CITIBIKE_BIG_V2.UNCLUSTERED.TRIPS_VW

Success...CITIBIKE_BIG_V2.UNCLUSTERED.TRIPS_WEATHER_VW

Success...SALES.PUBLIC.REVENUE

Success...SALES.PUBLIC.SALES_REVENUE

Success...SALES_05_31_19.PUBLIC.REVENUE

Success...SALES_05_31_19.PUBLIC.SALES_REVENUE

Success...TEST.AUDIT.NEW_VIEW

Failure...TEST.VIEWTEST.C : SQL compilation error: - Failure during expansion of view 'C': SQL compilation error: - Object 'TEST.VIEWTEST.A' does not exist.

Success...TEST.PUBLIC.ORDERS_SECURE_VIEW

Success...TEST.TPCH_SF1.ORDERS_SECURE_VIEW

Success...TEST_CHAR.PUBLIC.V_TEST_CHAR

*/