One of the things needed to test geolocating a weblog is, well, a weblog. As it turns out, finding one is not easy. After some research, I located a partially obfuscated Apache web log from the US Securities and Exchange Commission (SEC), available here:

https://www.sec.gov/dera/data/edgar-log-file-data-set.html

Their weblogs obfuscate the final number of each IPv4 dotted quad, so the addresses look like this: 192.168.1.jjr.

According to their documentation, their method always replaces a given final number from 0 to 255 with the same three-letter value. The SEC does not disclose which number a value such as “jjr” stands for (it could be 134, for example), but for the purposes of this test it’s acceptable to assign each of the 256 unique three-letter replacements its own number from 0 to 255.

After downloading the log file named “log20170630.zip”, we need to load it to a stage and use a file format to parse it. Here is the file format I used:

ALTER FILE FORMAT "TEST"."IP2LOCATION".IP_LOG SET COMPRESSION = 'AUTO' FIELD_DELIMITER = ',' RECORD_DELIMITER = '\n' SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = 'NONE' TRIM_SPACE = TRUE ERROR_ON_COLUMN_COUNT_MISMATCH = TRUE ESCAPE = 'NONE' ESCAPE_UNENCLOSED_FIELD = '\134' DATE_FORMAT = 'AUTO' TIMESTAMP_FORMAT = 'AUTO' NULL_IF = ('\\N');
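For readers who have not staged a file before, the load step might look roughly like the sketch below. The stage name IP_LOG_STAGE, the table name RAW_WEB_LOG, and the local path are placeholders rather than names from this post. It also assumes the archive was unzipped first, since to my knowledge ZIP is not among the compression types Snowflake's COPY command accepts.

```sql
-- Hypothetical names: IP_LOG_STAGE, RAW_WEB_LOG, and the /tmp path are placeholders.
create stage if not exists IP_LOG_STAGE;

-- PUT runs from a client such as SnowSQL, not from a worksheet.
-- Assumes log20170630.zip was already unzipped to a CSV file.
put file:///tmp/log20170630.csv @IP_LOG_STAGE auto_compress = true;

-- Parse the staged file with the file format defined above.
copy into RAW_WEB_LOG
from @IP_LOG_STAGE
file_format = (format_name = 'TEST.IP2LOCATION.IP_LOG');
```

The target table has to exist before the COPY, with one column per field in the log.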

After loading the data into a table (I called mine IP_LOCATION), the next thing to do is replace the three-letter obfuscated values with numbers from 0 through 255. To do this, I ran an ELT process using these steps:

Step 1: Create a new table with all the columns from the original web log, plus a new column to hold the last three characters of the IP number (the three-letter replacement).

Step 2: Create a table with each of the distinct values in the new column. There will be 256 of them, representing the numbers 0 through 255. For the purposes of this exercise, it is not important which numbers map to which three-letter replacements, so I assigned them sequentially from 0 through 255 in the order the select distinct query returned them. I called these IP numbers simulated, even though only the final quad of each dotted quad is simulated.

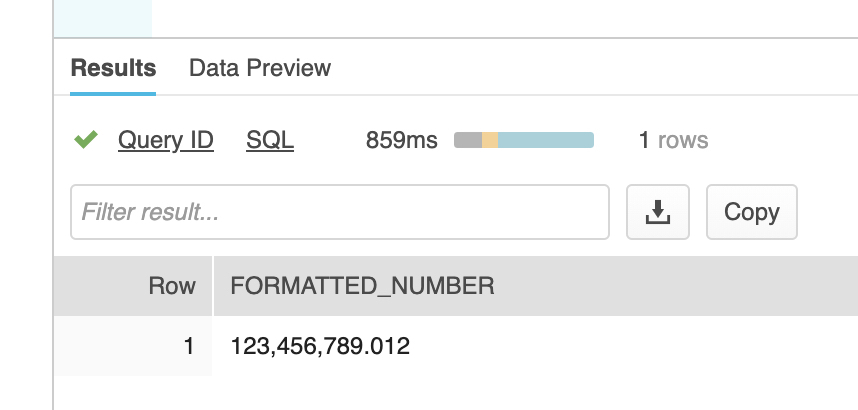

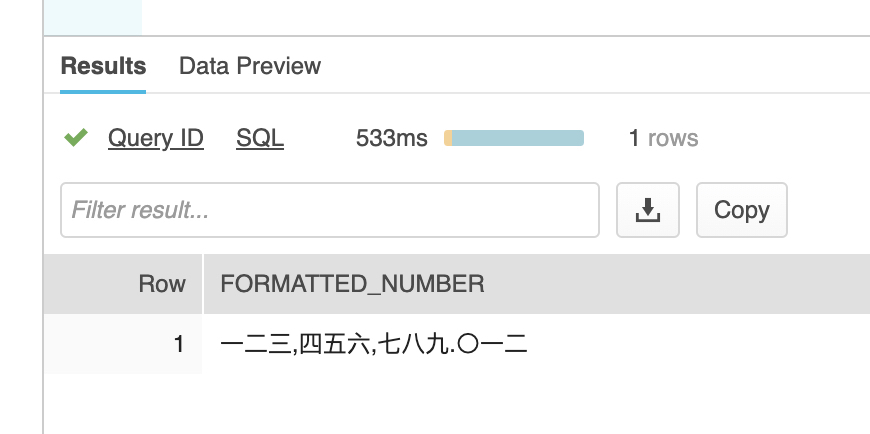
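Steps 1 and 2 can be sketched in SQL roughly as follows. This is an illustration under assumptions, not necessarily the statements shown in the images. RAW_WEB_LOG, IP_LOG_SUFFIX, QUAD_MAP, and OBFUSCATED_QUAD are placeholder names, and the raw IP column is assumed to be called IP. The sketch orders the suffixes alphabetically so the mapping is repeatable, which differs slightly from taking them in whatever order select distinct returns.

```sql
-- Step 1: copy the log and keep the last three characters of the IP
-- (the three-letter replacement) in its own column.
create table IP_LOG_SUFFIX as
select R.*, RIGHT(R.IP, 3) as OBFUSCATED_QUAD
from RAW_WEB_LOG R;

-- Step 2: give each distinct suffix a number from 0 through 255.
-- ROW_NUMBER starts at 1, so subtract 1.
create table QUAD_MAP as
select D.OBFUSCATED_QUAD,
       ROW_NUMBER() over (order by D.OBFUSCATED_QUAD) - 1 as SIMULATED_QUAD
from (select distinct OBFUSCATED_QUAD from IP_LOG_SUFFIX) D;

-- Swap the suffix for its number to get a complete dotted quad.
select S.*,
       M.SIMULATED_QUAD,
       LEFT(S.IP, LENGTH(S.IP) - 3) || M.SIMULATED_QUAD::varchar as SIMULATED_IP
from IP_LOG_SUFFIX S
join QUAD_MAP M
  on S.OBFUSCATED_QUAD = M.OBFUSCATED_QUAD;
```

A quick sanity check after Step 2 is `select count(*) from QUAD_MAP`, which should return 256 if every possible suffix appears in the log.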

Step 3: Convert the IP dotted quads into IP numbers using the UDF in Part 1 of this series. The SQL looks like this:

create table IP_INT_LOG as(

select IpToInteger(SIMULATED_IP) as IP_NUMBER, IP, SIMULATED_QUAD, SIMULATED_IP, REQUEST_DATE, REQUEST_TIME, ZONE, CIK, ACCESSION, EXTENSION, CODE, SIZE, IDX, NOREFER, NOAGENT, FIND, CRAWLER, BROWSER from IP_LOG);
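If you don't have Part 1 handy, the conversion itself is plain arithmetic: each quad is a base-256 digit. The sketch below is not the IpToInteger UDF, just a pure-SQL illustration of the same calculation on one sample address.

```sql
-- 192.168.1.134 as a single number:
-- 192 * 256^3 + 168 * 256^2 + 1 * 256 + 134
select
      SPLIT_PART('192.168.1.134', '.', 1)::number * 16777216
    + SPLIT_PART('192.168.1.134', '.', 2)::number * 65536
    + SPLIT_PART('192.168.1.134', '.', 3)::number * 256
    + SPLIT_PART('192.168.1.134', '.', 4)::number as IP_NUMBER;
-- returns 3232235910
```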

I was concerned about the performance of running a JavaScript UDF 20,488,579 times to convert IP dotted quads into IP numbers. It turned out I needn’t have been concerned. Snowflake converted all 20.5 million in 16.97 seconds using an Extra Small (XS) warehouse. Out of curiosity, I dropped the resulting table and increased the size of the warehouse to a medium (M), and it ran in 7.85 seconds. This is one data point suggesting that increasing the warehouse size improves performance, including for queries that call UDFs.

This led me to the final part of the experiment: resolving the IP numbers in the web log to geolocations. We have the IP numbers in a log table, and the IP geolocations in another. My first thought was to join the tables, but this is an unusual join. The standard join matches keys. In this example, we need to use inequalities to join the tables. In other words, join the IP log information with the geolocation data where the IP log’s IP number falls between the lower and upper bounds of a location’s address range.

select

L.COUNTRY_ABBREVIATION, L.COUNTRY, L.STATE_OR_PROVINCE, L.CITY, L.LATITUDE, L.LONGITUDE, L.POSTAL_CODE,

I.IP_NUMBER, I.IP, I.SIMULATED_QUAD, I.SIMULATED_IP, I.REQUEST_DATE, I.REQUEST_TIME, I.ZONE, I.CIK, I.ACCESSION, I.EXTENSION, I.CODE, I.SIZE, I.IDX, I.NOREFER, I.NOAGENT, I.FIND, I.CRAWLER, I.BROWSER

from IP_INT_LOG I, IP_LOCATION L

WHERE I.IP_NUMBER BETWEEN L.START_NUMBER AND L.END_NUMBER

limit 10;
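To see how the inequality join behaves on something small, here is a self-contained sketch with toy data. The ranges and labels are invented for illustration and do not come from the geolocation table. It uses explicit join syntax, which expresses the same condition as the comma join with a WHERE clause.

```sql
-- Toy data only: TOY_LOG, TOY_LOCATION, and the labels are made up.
with TOY_LOG (IP_NUMBER) as (
    select * from values
        (3232235910),   -- 192.168.1.134
        (167772165)     -- 10.0.0.5
),
TOY_LOCATION (START_NUMBER, END_NUMBER, LABEL) as (
    select * from values
        (167772160,  184549375,  'range A'),   -- 10.0.0.0 to 10.255.255.255
        (3232235520, 3232301055, 'range B')    -- 192.168.0.0 to 192.168.255.255
)
select I.IP_NUMBER, L.LABEL
from TOY_LOG I
join TOY_LOCATION L
  on I.IP_NUMBER between L.START_NUMBER and L.END_NUMBER;
```

BETWEEN is inclusive at both ends, so an address equal to START_NUMBER or END_NUMBER still matches. As long as the ranges do not overlap, each log row matches at most one location row.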

Note: This procedure is missing one important change that dramatically improves performance. This will be the topic of a follow-on Part 3 of this post.