CHAR Padding in Snowflake

For strings that need to be fixed-width, it’s common to use the CHAR data type, for example CHAR(10). In this example, often databases will right pad a field has fewer than 10 characters with spaces.

If we define a CHAR(10) and store “ABCDE” in the field, some databases will add spaces on the right to fill the 10 width. In the example below, the “.” character represents the space:

Column_Name

1234567890

ABCDE.....

A side effect of the advanced way Snowflake stores strings is that a column defined as CHAR(10) with only 5 characters such as “ABCDE” will return only the five characters instead of 10 such as “ABCDE “. From the Snowflake documentation:

Snowflake currently deviates from common CHAR semantics in that strings shorter than the maximum length are not space-padded at the end.

https://docs.snowflake.net/manuals/sql-reference/data-types-text.html#char-character

For use cases where fixed-width of strings is important, there are a couple of options. One option is to right pad the strings on ingest, but this adds work and storage overhead.

I think a better way is to create a simple view that returns right padded strings for columns defined as CHAR. Fortunately, the Snowflake RPAD function makes this easy.

The following sample explains how to create a view to ensure fixed-width columns. Note that since CHAR is a synonym for VARCHAR, this technique will work with columns defined as CHAR, VARCHAR, STRING, CHARACTER, and TEXT.

--Create a simple test table with a CHAR(10)

create table TEST_CHAR

(

TEST_COLUMN char(10)

);

--Insert some values

insert into TEST_CHAR (TEST_COLUMN) values ('1234');

insert into TEST_CHAR (TEST_COLUMN) values ('12345');

insert into TEST_CHAR (TEST_COLUMN) values ('1234567890');

--Create a view that right pads the CHAR fields to width 10

--Change the space to another character to see the effect.

create or replace view V_TEST_CHAR as

select RPAD(TEST_COLUMN, 10, ' ') as TEST_COLUMN from TEST_CHAR;

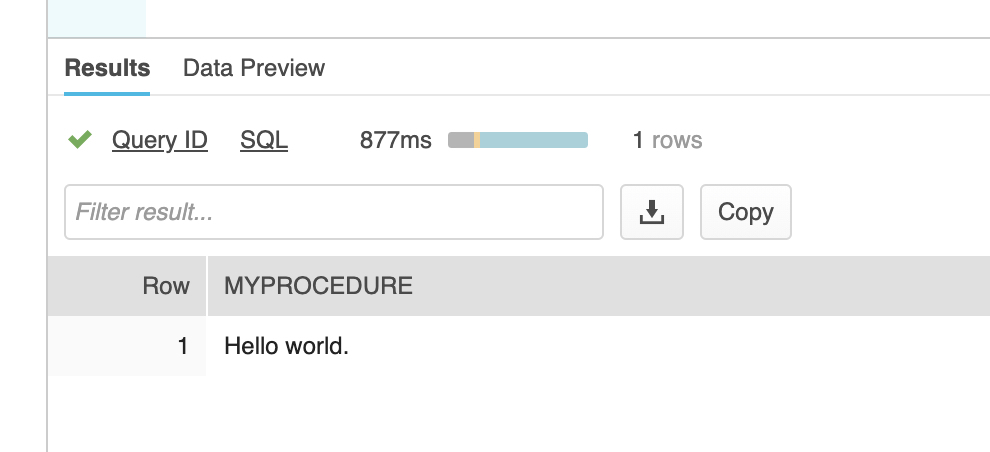

--Select from the view.

select * from V_TEST_CHAR;