Overloading JavaScript UDFs in Snowflake

Snowflake supports overloading user-defined functions (UDFs). Overloading is a convenient way to handle calls whose parameters differ in data type or in number. Developers often overload functions so that callers need to pass only the parameters that are relevant to them.

Consider the common SUBSTR function (a synonym for SUBSTRING). You can call it using either of two overloads:

select substr('Hello, world.', 8); --This returns "world."

select substr('Hello, world.', 8, 5); --This returns "world"

In the first statement, the caller passed the string and the start position. Omitting the final parameter selects the overload with the default behavior, which returns everything through the end of the string.

In the second statement, the caller wanted to drop the final period. Adding a third parameter for the length selected the other overload, which returns five characters instead of the rest of the string.

This is a common design for overloaded functions: mandatory parameters go on the left and optional parameters follow. Each allowable combination of parameters becomes an overload of the function. In this design, developers typically write one base function that takes all parameters. The overloads with missing parameters call the base function, supplying a default value for each missing parameter.
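For comparison, here is that pattern in a language where one function can freely call another. This is a minimal JavaScript sketch assuming SUBSTR-like semantics (1-based start, optional length); the function names are hypothetical, and the sketch ignores SUBSTR's rules for zero or negative start positions.

```javascript
// Sketch of the "one base function, thin overloads" pattern in plain JavaScript.
// substrBase and substrToEnd are hypothetical names; substrBase uses SUBSTR-style
// 1-based start positions but does not handle zero or negative starts.
function substrBase(text, start, length) {
  const from = start - 1; // convert the 1-based start to a 0-based index
  return text.slice(from, from + length);
}

// The shorter overload supplies a default length and delegates to the base function.
// text.length is always long enough to reach the end of the string.
function substrToEnd(text, start) {
  return substrBase(text, start, text.length);
}

console.log(substrToEnd("Hello, world.", 8)); // world.
console.log(substrBase("Hello, world.", 8, 5)); // world
```

JavaScript has no overloading by argument count, so the wrapper gets its own name here; in Snowflake both overloads would share one function name.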

This design ensures that there’s only one place to maintain and debug code. The problem is that Snowflake JavaScript UDFs cannot call other UDFs. One workaround is to copy the same code into every overload, but that means maintaining, improving, and debugging the same logic in several places (three, in the example below). Fortunately, there’s a way to write one base function and call it from overloaded functions that supply defaults.

The solution is to write the base UDF, with all parameters, in JavaScript. For each overload that only calls the base function with defaults for the missing parameters, write an SQL UDF that calls the base JavaScript UDF. This works because SQL UDFs can call other UDFs, which JavaScript UDFs cannot do.

In this example, the JavaScript UDF returns a string that displays as a graphical progress bar. The options are typical for progress bars: percent completion, number of decimal places to display on the percentage, and number of segments to display.

The only one that can’t be defaulted is the percent complete. Reasonable defaults for the others are two decimal places and ten segments.

-- Make a progress bar function that looks like this: ⬛⬛⬜⬜⬜⬜⬜⬜⬜⬜ 24.53%
-- This is the main JavaScript function with all parameters.
create or replace function PROGRESS_BAR(PERCENTAGE float, DECIMALS float, SEGMENTS float)
returns string
language javascript
as
$$
    // Treat non-numeric input as 0% and clamp the value to the 0-100 range.
    var percent = PERCENTAGE;
    if (isNaN(percent)) percent = 0;
    if (percent < 0) percent = 0;
    if (percent > 100) percent = 100;

    // Round to the requested number of decimal places (toFixed returns a string).
    percent = percent.toFixed(DECIMALS);

    // Split the bar into filled and empty segments based on the rounded percentage.
    var filledSegments = Math.round(SEGMENTS * (percent / 100));
    var emptySegments = SEGMENTS - filledSegments;
    var bar = '⬛'.repeat(filledSegments) + '⬜'.repeat(emptySegments);

    return bar + " " + percent + "%";
$$;

-- This is an overload with only the percentage, using defaults for the
-- number of segments and the decimal places to display on the percentage.
create or replace function PROGRESS_BAR(PERCENTAGE float)
returns string
language sql
as
$$
    select progress_bar(PERCENTAGE, 2, 10)
$$;

-- This is an overload with the percentage and the number of decimal places
-- to display. It uses the default for the number of segments.
create or replace function PROGRESS_BAR(PERCENTAGE float, DECIMALS float)
returns string
language sql
as
$$
    select progress_bar(PERCENTAGE, DECIMALS, 10)
$$;

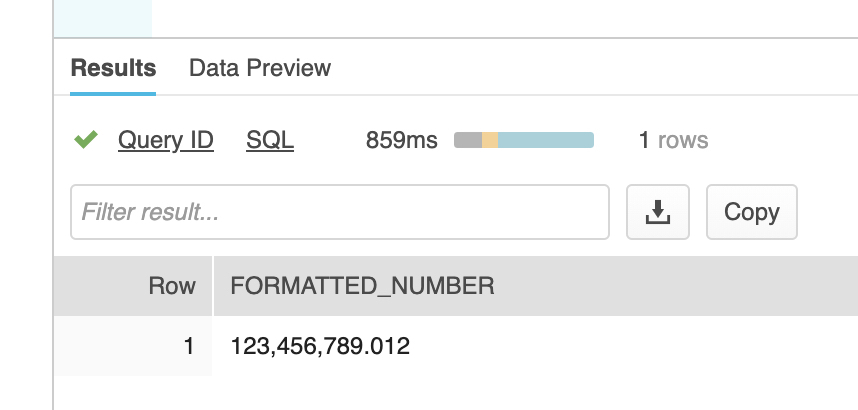
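Seen from the caller's side, the two SQL overloads differ only in which defaults they fill in before reaching the JavaScript UDF. The sketch below models that in JavaScript with a lookup table keyed by argument count. It is a simplified mental model, not how Snowflake resolves overloads internally (Snowflake also considers argument data types), and all names in it are hypothetical.

```javascript
// Mental model only, not Snowflake's resolver: overloads keyed by argument count.
// The 1- and 2-argument entries play the role of the SQL UDFs, filling in the
// defaults (2 decimals, 10 segments) and delegating to the full version.
const DEFAULT_DECIMALS = 2;
const DEFAULT_SEGMENTS = 10;

// Stand-in for the JavaScript UDF: returns the arguments it would receive.
function fullProgressBar(percentage, decimals, segments) {
  return [percentage, decimals, segments];
}

const overloads = new Map([
  [1, (p) => fullProgressBar(p, DEFAULT_DECIMALS, DEFAULT_SEGMENTS)],
  [2, (p, d) => fullProgressBar(p, d, DEFAULT_SEGMENTS)],
  [3, fullProgressBar],
]);

// Pick the implementation by argument count; unknown arities are an error.
function callProgressBar(...args) {
  const impl = overloads.get(args.length);
  if (!impl) throw new Error(`no PROGRESS_BAR overload takes ${args.length} arguments`);
  return impl(...args);
}

console.log(callProgressBar(24.5293));         // resolves to 24.5293, 2, 10
console.log(callProgressBar(24.5293, 1));      // resolves to 24.5293, 1, 10
console.log(callProgressBar(24.5293, 0, 100)); // resolves to 24.5293, 0, 100
```

Because only the full version contains real logic, changing a default means editing one thin wrapper, which is the same benefit the SQL overloads provide.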

-- Call the main JavaScript function by sending all three parameters:
select progress_bar(24.5293, 0, 100) as PROGRESS;

-- Call the overloaded SQL function by omitting the number of segments (segments defaults to 10):
select progress_bar(24.5293, 1) as PROGRESS;

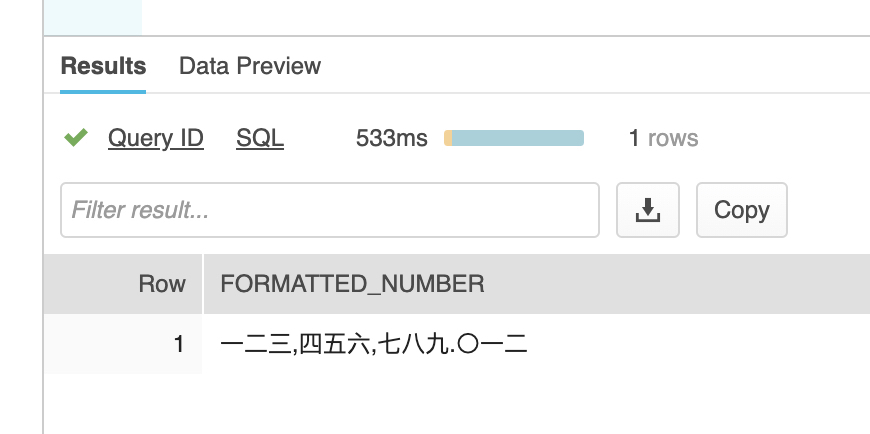

-- Call the overloaded SQL function specifying only the percentage
-- (segments defaults to 10 and decimals to 2).
-- It should display like this: ⬛⬛⬜⬜⬜⬜⬜⬜⬜⬜ 24.53%
select progress_bar(24.5293) as PROGRESS;

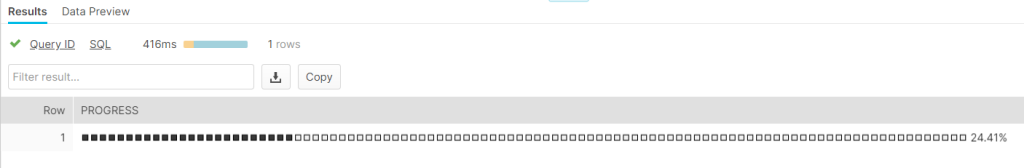
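Because the UDF body is ordinary JavaScript, you can also check these results outside Snowflake. A minimal sketch, assuming Node.js (or any modern JavaScript runtime) and that FLOAT arguments arrive as ordinary JavaScript numbers: the body is copied into a regular function with the hypothetical name `progressBar`, whose parameters reuse the uppercase names the UDF body references.

```javascript
// Hypothetical local harness: the PROGRESS_BAR UDF body wrapped in a plain function.
// Parameter names match the uppercase argument names the UDF body references.
function progressBar(PERCENTAGE, DECIMALS, SEGMENTS) {
  var percent = PERCENTAGE;
  if (isNaN(percent)) percent = 0;   // non-numeric input becomes 0%
  if (percent < 0) percent = 0;      // clamp to the 0-100 range
  if (percent > 100) percent = 100;
  percent = percent.toFixed(DECIMALS); // string such as "24.53"
  var filledSegments = Math.round(SEGMENTS * (percent / 100));
  var emptySegments = SEGMENTS - filledSegments;
  var bar = '⬛'.repeat(filledSegments) + '⬜'.repeat(emptySegments);
  return bar + " " + percent + "%";
}

// The same arguments the three SQL calls above resolve to:
console.log(progressBar(24.5293, 0, 100)); // 25 filled, 75 empty, then "25%"
console.log(progressBar(24.5293, 1, 10));  // ⬛⬛⬜⬜⬜⬜⬜⬜⬜⬜ 24.5%
console.log(progressBar(24.5293, 2, 10));  // ⬛⬛⬜⬜⬜⬜⬜⬜⬜⬜ 24.53%
```

Note that the filled-segment count is computed from the already-rounded percentage, so with zero decimals 24.5293 is treated as 25% for both the label and the bar.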

By the way, this progress bar UDF is fully functional. If you have a long-running process, such as loading a large number of files, you can monitor it by passing the percent complete to PROGRESS_BAR and refreshing the query periodically. Here’s an example using a long (100-segment) progress bar at 24.41%:

select progress_bar(24.41, 2, 100) as PROGRESS;
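To drive the bar from a real process, you first need the percent complete. A minimal sketch of that arithmetic, assuming you can obtain the number of files loaded so far and the total number of files from your own tracking (how you get those counts is outside this example, and `percentLoaded` is a hypothetical helper):

```javascript
// Hypothetical helper: convert "files loaded so far" and "total files" into the
// PERCENTAGE argument for PROGRESS_BAR.
function percentLoaded(loaded, total) {
  // Guard against an empty or unknown total: 5 / 0 would give Infinity,
  // which PROGRESS_BAR would then clamp to 100%.
  if (!(total > 0)) return 0;
  return (100 * loaded) / total;
}

console.log(percentLoaded(250, 1024)); // 24.4140625
```

Passed to `progress_bar(..., 2, 100)`, 24.4140625 is displayed as 24.41% with 24 filled segments.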