Preserving Table Change History Indefinitely

Snowflake supports Time Travel and Stream retention up to 90 days. What if you need to preserve the history indefinitely? You can do that by making a Zero Copy Clone at intervals shorter than the Time Travel retention period.

Suppose Finance sends you occasional corrections to a table containing sales records. For a handful of records, UPDATE statements correct the figures. For large-scale corrections you use MERGE operations. The table now contains the corrected figures, but what if for audit and reporting purposes you need to know the values as they existed before correction?

Time Travel allows this for up to 90 days, but we can keep that history indefinitely by making Zero Copy Clones. Here’s an example:

-- Snowflake's Zero Copy Cloning preserves table history for up to 90 days.

-- To preserve change history indefinitely, create a Zero Copy Clone

-- more frequently than the time travel retention period.

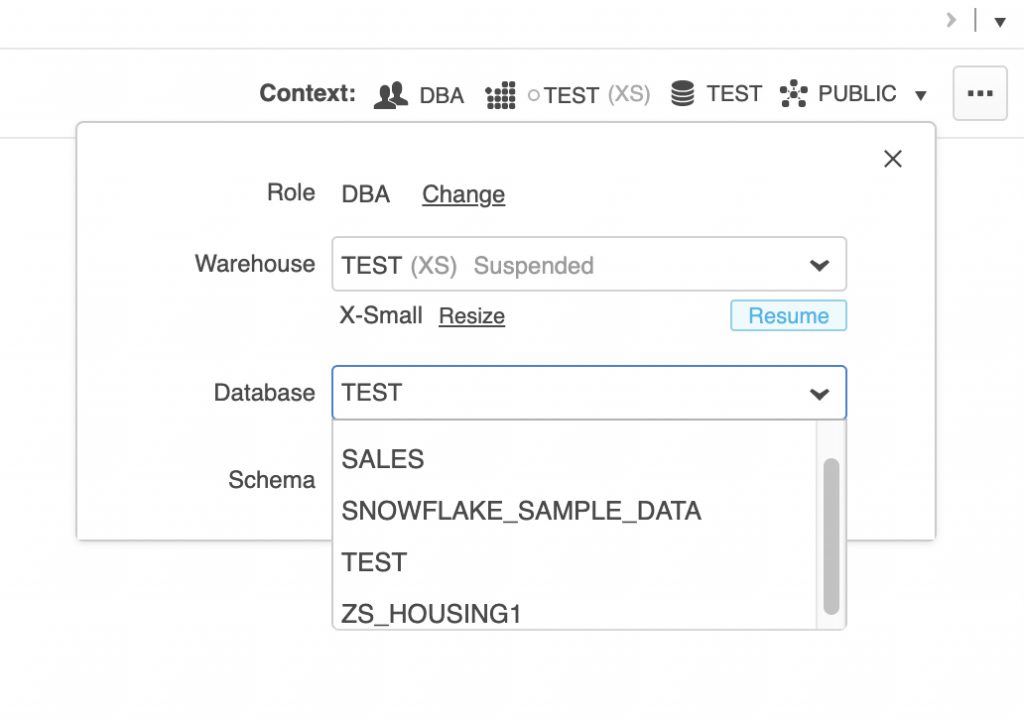

use role sysadmin;

use database TEST;

use schema SALES;

use warehouse TEST;

-- First, check how long Snowflake is set to preserve Time Travel data for the table

select RETENTION_TIME from INFORMATION_SCHEMA.TABLES where TABLE_NAME = 'SALES_FACT';

-- If necessary, increase the duration so you can clone it before Time Travel data rolls off.

alter table SALES_FACT set data_retention_time_in_days = 30;

-- Optionally, create a new schema to keep the historical clones. This will keep the base table

-- schema cleaner.

create schema if not exists SALES_FACT_HISTORY;

-- Run the remaining section as frequently as required to get the needed granularity of history

-- preservation. For critical tables in regulated industries, it may be necessary to create

-- a clone after every UPDATE or MERGE performed.

-- You'll need to create a dynamic table name, usually based on date.

set CLONE_TABLE_NAME = 'SALES_FACT_HISTORY.SALES_FACT_CLONE_' || to_varchar(CURRENT_DATE, 'YYYY_MM_DD');

-- Optionally check the name we'll use for the table

--select $CLONE_TABLE_NAME;

-- Use the identifier() SQL function to use a SQL variable as an object name.

-- Run this statement once a month, once a week, or any duration more frequent than the

-- table's Time Travel retention period.

create table identifier($CLONE_TABLE_NAME) clone SALES_FACT;

When preserving change history, there’s an important consideration for highly-regulated data. Time Travel and Streams preserve a complete record of every change to a table. Creating a clone preserves the state only at the time it’s cloned. What does this mean in practice?

Suppose you clone a table once a day at midnight. Months later, Finance sends revised sales figures. You update the rows quickly. A few hours later, Finance notices a miscalculation and sends corrected values. When you apply updates to the same rows twice that day, the clone at midnight will reflect only the later change. For most data, this is probably fine. For highly regulated data, it may not be acceptable. In this case, you’ll need to perform a clone after every update or merge that updates rows.